Research Study: Generating Plain Language Summaries with Medically Tuned AI

The Advantage of Medically Tuned AI in Plain Language Summaries

In the rapidly evolving landscape of medical communications, the ability to translate complex scientific data into accessible content is paramount. Sorcero conducted a study with our partners to demonstrate that bespoke generative AI (BAI) technology tuned for medical and scientific content significantly reduces the time and effort required by professional medical writers to develop Plain Language Summary (PLS) abstracts. By leveraging tailored AI tooling, organizations can drastically improve content readability and population accessibility without sacrificing the clinical accuracy essential for scientific integrity.

This research was conducted as a collaborative effort involving experts from Sorcero, UCB Pharma, and Lumanity. The study's authors sought to address the critical gap in scientific accessibility for lay populations.

The findings were originally showcased as a poster presentation titled "Examining the time- and effort-saving utility of tailored AI-tooling for abstract Plain Language Summary Development" at the 20th Annual Meeting of the International Society for Medical Publication Professionals (ISMPP).

See the poster ->

Further formalizing this work, the research was published in JAMIA Open in April 2025, underscoring its clinical and technical significance in the field of medical informatics.

Read the research on JAMIA Open ->

The Challenge: Overcoming Accessibility Barriers in Scientific Communication

Traditional scientific PLS often fail to fulfill their intended purpose. Studies have shown that these summaries remain difficult for lay populations to comprehend without a medical education, which severely limits their accessibility. To address this gap, research was conducted to test a BAI process specifically designed to enhance efficacy in generating PLS abstracts for non-specialist audiences.

Study Objectives: Quantifying Time, Effort, and Accuracy

The primary objective of this research was to quantify the time and effort savings afforded by a proprietary BAI process in the development of PLS abstracts by professional medical writers (PMW).

Secondary aims included:

- Assessing output quality through Subject Matter Expert (SME) evaluation.

- Determining the suitability of the summaries as a discussion vehicle for Primary Care Physicians (PCPs) and their patients.

- Measuring the overall reading level and accessibility of the output.

Methodology: Evaluating Manual, Standard AI, and Bespoke AI Workflows

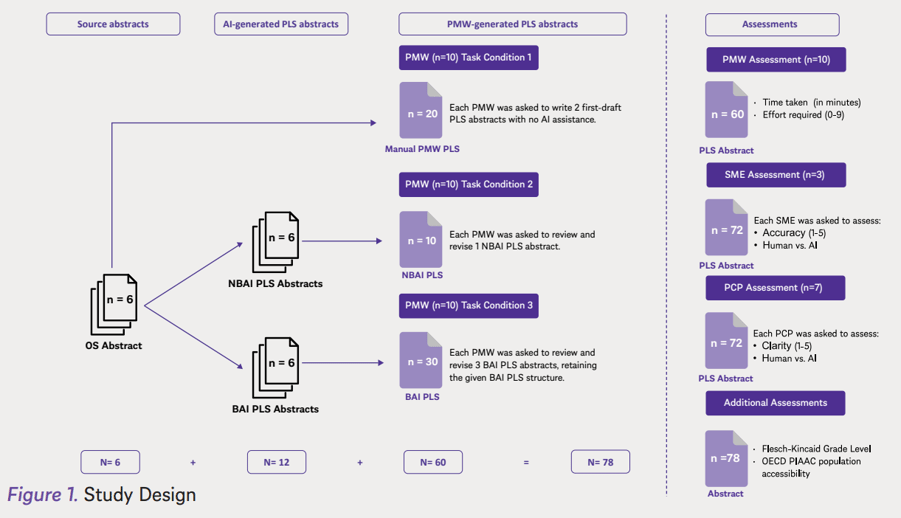

The study involved 10 professional medical writers (4 US, 5 UK, 1 France) who developed PLS abstracts for six scientific articles in the fields of neurology and rheumatology. The writers worked under three distinct task conditions:

- Manual (Condition 1): Writing PLS abstracts from scratch based on original scientific (OS) abstracts without any AI assistance.

- Non-Bespoke AI (Condition 2): Manually reviewing and editing abstracts generated by a publicly available, standard AI interface (NBAI).

- Bespoke AI (Condition 3): Reviewing and editing abstracts generated via a multi-stage BAI process utilizing proprietary LLMs and task-specific coding.

The final outputs were evaluated by 3 SMEs for accuracy and 7 PCPs (3 US, 4 EU) for clarity using Likert rating scales, with all reviewers blinded to the development process and randomly assigned.

Efficiency Results: Achieving a 41% Reduction in Completion Time

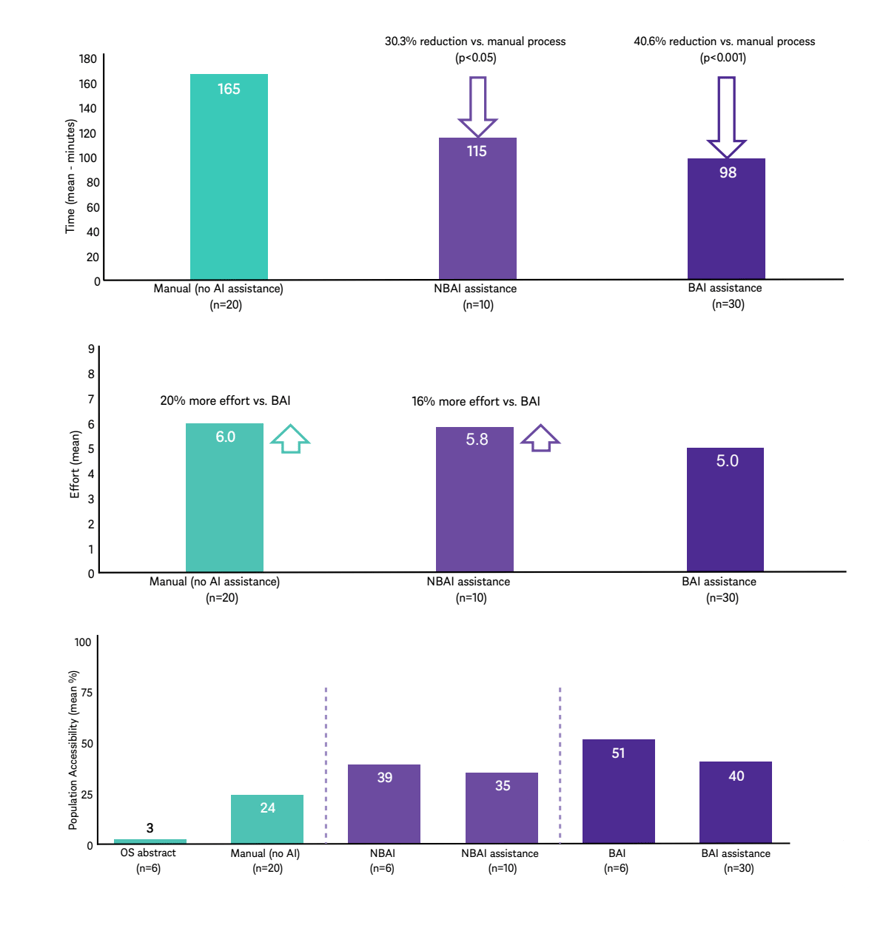

The implementation of BAI led to dramatic productivity gains for the medical writing team:

- Time Savings: There was a 41% reduction in completion time for BAI-assisted summaries compared to the manual unassisted process (p<0.001).

- Reduced Effort: Both the manual and NBAI processes required significantly more perceived effort than the BAI process (20% and 16% more effort, respectively).

- Lower Revision Burden: The mean Levenshtein distance, a measure of required edits, was lower for BAI (1570) than for NBAI (1780), meaning writers had to make fewer manual changes to reach a final draft.
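To make the revision-burden metric concrete, here is a minimal sketch of how a Levenshtein distance between an AI-generated draft and a writer's final text could be computed. It assumes a character-level comparison; the article does not say whether the study counted edits per character or per word, and the function name and sample strings are hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions,
    and substitutions needed to turn string a into string b."""
    if len(a) < len(b):
        a, b = b, a  # keep the shorter string in the inner loop to save memory

    # previous[j] holds the distance between the processed prefix of a and b[:j]
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]  # turning a[:i] into the empty string takes i deletions
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(
                previous[j] + 1,         # delete ch_a
                current[j - 1] + 1,      # insert ch_b
                previous[j - 1] + cost,  # substitute, or keep a matching character
            ))
        previous = current
    return previous[-1]


# Hypothetical draft and final text, for illustration only (not study data).
ai_draft = "The medicine lowered how often people had seizures."
final_text = "The medicine reduced how often people had seizures."
print(levenshtein(ai_draft, final_text))
```

A lower distance means the writer changed less of the generated draft. Taking the reported means at face value, 1570 versus 1780 is a difference of 210 edits, or roughly 12% fewer edits for BAI drafts than for NBAI drafts.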

Expert Validation: Accuracy and Physician Suitability

Subject Matter Experts and Primary Care Physicians validated that efficiency did not come at the cost of quality:

- Clinical Accuracy: SMEs found that BAI-assisted summaries reflected the original scientific abstracts more accurately than manually written summaries.

- End-User Clarity: PCPs rated BAI-developed abstracts as the most effective vehicle for explaining research findings to patients.

- Human-Like Quality: SMEs and PCPs struggled to identify the correct development process, correctly guessing "human vs. AI" only 56% and 61% of the time, respectively.

Readability Analysis: Achieving the 6th-Grade Standard

The efficiency gains did not come at the cost of readability. In fact, readability increased:

- Ease of Reading: AI-assisted PLS abstracts were consistently easier to read than those written manually.

- Unrevised Performance: Unrevised abstracts generated through the BAI process were found to be the easiest to read of all tested versions.

- Broad Understanding: BAI summaries were understandable to a larger percentage of the population than both the original scientific abstracts (17x) and the summaries written manually by medical writers (2.1x).
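The article does not name the readability formula behind these results. As an illustration of how a reading grade level can be estimated, the sketch below uses the widely used Flesch-Kincaid grade level formula with a rough vowel-group syllable counter. Both the choice of formula and the syllable heuristic are assumptions, not the study's method, and the sample sentences are hypothetical.

```python
import re


def count_syllables(word: str) -> int:
    """Rough syllable estimate: count vowel groups, discounting a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(1, count)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level:
    0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one word")
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)


# Hypothetical sentences, for illustration only (not study data).
technical = "Adjunctive pharmacotherapy significantly attenuated seizure frequency."
plain = "The drug cut the number of seizures."
print(round(flesch_kincaid_grade(technical), 1))
print(round(flesch_kincaid_grade(plain), 1))
```

Shorter sentences and shorter words both lower the score, which is why a plain-language rewrite of the same finding lands at a much lower grade level than the technical phrasing. A production analysis would normally use a dictionary-based syllable counter rather than this heuristic.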

Conclusion: The Strategic Value of Medically Tuned AI

The research concludes that augmenting the medical writing process with bespoke AI tooling saves significant time and effort for professional writers. Crucially, these gains come with greater readability and population accessibility, without any degradation of accuracy or clarity.

For scientific communications organizations, this represents a superior alternative to both standard non-bespoke AI and traditional human-only workflows, providing a scalable way to deliver fit-for-purpose scientific summaries to patients and the broader public.

Study Contributors

This study was a collaborative effort between experts in medical communications, AI development, and pharmaceutical research. The following individuals played key roles in designing, executing, and analyzing the study:

| Sorcero | Lumanity | UCB |


Their combined expertise ensured a rigorous, multidisciplinary approach to evaluating the impact of AI on plain language summary development.

41% faster with Bespoke AI compared to manual writing

17x more accessible with Bespoke AI compared to original source


"Medically tuned, bespoke AI saves medical writers time and effort in developing plain language summary abstracts and helps them be understood by a broader audience without any degradation in accuracy."

Walter Bender, Chief Scientific Officer
