We've got a question:

Do you think you can tell the difference between an immuno-oncology summary written by a human Subject Matter Expert (SME) and one written by an AI algorithm?

Here's the experiment. We have 3 summaries. Two were written by subject matter experts with decades of industry experience and one was written by our AI algorithm. Do you think you can tell the difference?

What type of experiment is this?

Benchmarking AI Summaries: What's our Approach?

Artificial intelligence opens the door to delivering quality automatically generated summaries to Life Sciences. AI summaries can accelerate workflows, uncover insights, and reduce time to review dramatically. They can help experts review massive volumes of literature and focus on the points that matter most.

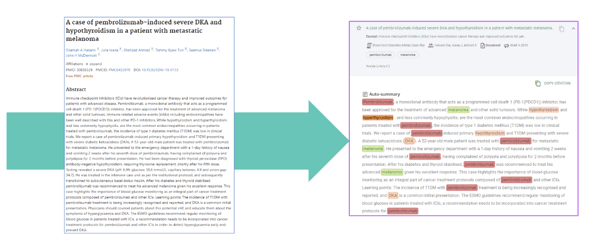

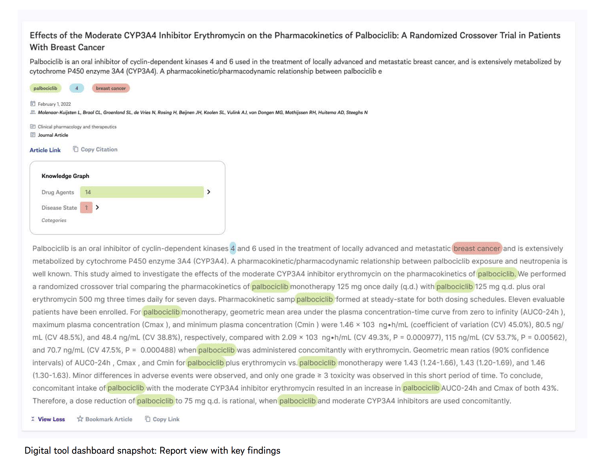

>> See how it works: Get introduced to our new Auto-summary v1.80 and see how the Clarity platform generates auto-summaries of abstracts and full-text articles.

But the quality of a summary can be hard to judge, because it is largely subjective.

So, to benchmark the performance of our Sorcero AI-generated summary, we’re testing the hypothesis:

A sufficiently “good” AI-generated summary will be indistinguishable from one produced by a human expert.

One way to measure this is through a Turing Test.

What's a Turing Test?

The Turing Test, proposed in 1950 by Alan Turing, an English mathematician, logician, and cryptanalyst, is still a widely used reference point for evaluating AI more than 70 years later.

The original Turing Test requires three terminals, each physically separated from the others.

One terminal is operated by a computer, while the other two are operated by humans.


During the test, one of the humans functions as the questioner, while the second human and the computer are the respondents.

The questioner interrogates the respondents within a specific subject area using a specified format and context.

After a preset length of time or number of questions, the questioner is then asked to decide which respondent was human and which was a computer.

The test is repeated many times.

If the questioner makes the correct determination in half of the test runs or fewer, the computer is considered to have artificial intelligence because the questioner regards it as "just as human" as the human respondent.
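The pass criterion described above can be written as a small check. This is a minimal sketch; the function name `passes_turing_test` and its parameters are illustrative assumptions, not part of any Sorcero tooling.

```python
def passes_turing_test(correct_identifications: int, total_runs: int) -> bool:
    """Return True when the questioner picked the computer correctly
    in half of the test runs or fewer (the criterion described above)."""
    if total_runs <= 0:
        raise ValueError("total_runs must be positive")
    if not 0 <= correct_identifications <= total_runs:
        raise ValueError("correct_identifications must be between 0 and total_runs")
    # Compare with integers to avoid floating-point rounding at exactly one half.
    return correct_identifications * 2 <= total_runs
```

For example, a questioner who is right in 5 of 10 runs means the computer passes, while 6 of 10 means it does not.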

💡Do you think you can pass our Turing Test?

What is the value of AI Summaries for Medical Affairs?

Auto-summaries use AI to generate shorter versions of text that contain the most essential information.

At Sorcero, we help Medical Affairs generate summaries from complex biomedical literature, trials, and content.

What are the benefits of AI Summaries?

In real-world use cases, one key benefit of auto summaries of medical content is saving time.

When monitoring large volumes of medical literature, effective summaries can accelerate decisions on whether to review an article and can reduce review time by more than 60%. They can help Medical Affairs get to the most important information - and act on it - much more quickly.

The role of Medical Affairs professionals is just as key as ever.

AI summaries can save Medical Affairs time - but they are here to support, not replace, the human expert. Medical Affairs expertise is critical to driving strategy and making key decisions.

Take a virtual tour of the Clarity platform to see auto summaries in action

Testing out AI-generated summaries: Our Methodology

In a similar prior experiment, we asked two SMEs to produce summaries of 25 abstracts from academic articles in the field of immuno-oncology from PubMed. These are the same SMEs who produced the summaries for our interactive Turing Test. [Take the test]

Meet the SMEs:

- SME 1 holds an MSc in Pharmacy and a PharmD in Pharmacoeconomics, with 10+ years of professional experience in Medical Affairs, clinical development, and digital transformation with a focus on patient care.

- SME 2 is a PharmD with 17+ years of experience in field Medical Affairs or field-trainer roles. Experience includes supporting drugs and drug devices in gastroenterology, cardiology, endocrinology, respiratory, immunology, urology, oncology, infectious disease, pain management, and rare diseases.

About the AI Summaries:

For this first experiment, we also generated summaries for the same abstracts using two AI algorithms.

- Approach 1: Use the Sorcero AI summarization service


➡️ See how the Sorcero Clarity platform generates auto-summaries from medical and scientific literature to help reduce review time by 88%

- Approach 2: Use a publicly available, general-domain summarization service (TLDR This)

Both the authors and the algorithms met the same extractive criteria to build their summaries:

- Both the algorithms and the authors were only allowed to use sentences taken from the original abstract

- The sentences could be placed in any order

- The summaries must contain a maximum of 60% of the words in the original text
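The three criteria above can be checked mechanically. The sketch below is illustrative only: the function names are hypothetical, the sentence splitter is deliberately naive (it breaks on `.`, `!`, or `?` followed by whitespace, so abbreviations such as "et al." would confuse it), and it is not the validation used in the study.

```python
import re


def split_sentences(text: str) -> list[str]:
    # Naive splitter (assumption): a sentence ends at ., ! or ? followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def word_count(text: str) -> int:
    # Words are whitespace-separated tokens.
    return len(text.split())


def meets_extractive_criteria(abstract: str, summary: str, max_ratio: float = 0.60) -> bool:
    """Check that every summary sentence appears verbatim in the abstract
    (in any order) and that the summary uses at most max_ratio of its words."""
    source_sentences = set(split_sentences(abstract))
    summary_sentences = split_sentences(summary)
    if not summary_sentences:
        return False
    all_from_source = all(s in source_sentences for s in summary_sentences)
    within_length = word_count(summary) <= max_ratio * word_count(abstract)
    return all_from_source and within_length
```

Because sentence order is not checked, a summary that reorders abstract sentences still passes, which matches the second criterion.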

Our Evaluation

Once the summaries were made, we asked five evaluators to assess the summaries.

Meet the evaluators:

- Expert 1 holds an MSc in biology, majoring in physiology and biochemistry, BSc in biology, and an MBA, majoring in marketing research and data analysis; 5 years of experience in the pharmaceutical industry.

- Expert 2 holds a BSc in biotechnology, with a focus in pharmacology and drug design; research experience in designing nanoparticles as an anti-cancer agent and in estimating the role of BPA in cervical cancer.

- Expert 3 holds an MPhil degree in molecular biology and a BSc degree in biotechnology; research experience in stem cells.

- Expert 4 holds an MPhil degree in industrial biotechnology and a BS degree in applied biosciences; master’s thesis was focused on devising a vaccine design against colorectal cancer.

- Expert 5 holds a postgraduate certificate in biochemistry and clinical pathology along with a BSc in biochemistry.

The “Turing Test”: Part One

For this experiment, we asked the evaluators to determine which 1 of 3 summaries was computer-generated.

First, the original title and abstract of each of the 25 articles were given to the evaluators.

They were also presented with 3 of the 4 summaries.

- Two SME-written summaries were always included.

- One of the machine-generated summaries was selected at random.

The presentation order of both the articles and the summaries was randomized.

For each set of summaries, the evaluators were asked to select the summary that they thought was machine-generated.
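The setup described above, two SME summaries plus one randomly chosen machine summary shown in random order, could be assembled as in the sketch below. The function `build_trial` and the label strings are assumptions made for illustration; the source does not describe the tooling actually used.

```python
import random


def build_trial(
    sme1_summary: str,
    sme2_summary: str,
    machine_summaries: dict[str, str],
    rng: random.Random,
) -> list[tuple[str, str]]:
    """Return three (label, summary) pairs in random presentation order:
    both SME summaries plus one machine summary picked at random."""
    # Sort the keys so the random choice is reproducible for a given seed.
    chosen = rng.choice(sorted(machine_summaries))
    candidates = [
        ("SME 1", sme1_summary),
        ("SME 2", sme2_summary),
        (chosen, machine_summaries[chosen]),
    ]
    rng.shuffle(candidates)  # randomize presentation order in place
    return candidates
```

The labels would be hidden from evaluators and kept only for scoring afterwards.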

Results: The Turing Test - Part One

In this Turing Test, we asked each evaluator to select one summary out of three that they thought was generated by an AI. We gathered 125 data points (5 evaluators x 25 abstracts).

The Sorcero AI-generated summaries were considered to be AI-generated only about 20% of the time.

This means that 80% of the time, the evaluators believed that a human SME wrote the Sorcero AI summaries.

Sorcero AI was considered to be more “human” than even the summaries by SME 1, which were ranked highest in an earlier phase of our experiment.

The Turing Test - Part Two

During the DIA 2022 Global Annual Conference in June 2022, we presented one set of summaries to 36 different subject matter experts (SMEs) during our Content Hub presentation.

Two summaries were written by the same two SMEs, and one was written by the Sorcero AI algorithm. We asked the audience to decide which summary was written by the AI. Here are the results:

- Sorcero AI Summary: 11 votes

- SME 1 Summary: 13 votes

- SME 2 Summary: 12 votes

This means that the AI summary was chosen the fewest times as computer-generated.
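To put the vote counts in context, they can be compared with what pure guessing among three summaries would produce (one third each). The sketch below only does arithmetic on the counts reported above; the variable names are illustrative.

```python
# Votes reported above for "which summary was written by the AI?"
votes = {"Sorcero AI": 11, "SME 1": 13, "SME 2": 12}

total = sum(votes.values())          # 36 participants
chance_share = 100 / len(votes)      # share expected from random guessing

# Percentage of participants who picked each summary, rounded to one decimal.
shares = {name: round(100 * count / total, 1) for name, count in votes.items()}

for name, share in shares.items():
    print(f"{name}: {share}% (chance: {chance_share:.1f}%)")
# Sorcero AI: 30.6% (chance: 33.3%)
# SME 1: 36.1% (chance: 33.3%)
# SME 2: 33.3% (chance: 33.3%)
```

All three shares sit close to the one-in-three rate expected from guessing.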

The balanced results suggest that the means of generation – human-written vs. machine-generated – would not have a noticeable impact on usage.

Can you pass this Turing Test? Try it out here.

Looking at the Results:

The Sorcero AI-generated summaries exceeded the performance of one SME along most measures.

Also, the variance among the SMEs exceeded the variance between the Sorcero AI-generated summaries and some of the SMEs.

The hard target of 60% used in the study meant that some summaries were longer than necessary and some left out important information. Giving some flexibility to the summary length would allow the AI to do a better job of surfacing necessary and sufficient information.

These results highlight the value of AI-generated summaries as a tool for helping SMEs manage large volumes of medical literature.

Sorcero AI is also used to summarize other types of content, including medical insights and clinical study reports.

This supports better decision-making across Life Sciences.

>> If you'd like to share this Turing Test, you can download it as a PDF here.

💡 Learn more about how auto-summaries can save your Medical Affairs team time and streamline decision-making. Take a tour of our Clarity platform and see how it works.
