For the past decade, the life sciences industry has eagerly awaited the moment the floodgates of artificial intelligence (AI) open.

It’s trickled its way down into various workflows, but many still lie in wait for the onrush of transformation.

AI brings promises of innovation, speed, and untapped potential. It can be easy to get swept into the tides of its possibilities, as well as the jargon.

For many, in the midst of all the hype, a clouded mist has veiled the technology. Claims by vendors and media outlets can be misleading or vague, making it increasingly difficult to align practical applications with the technology.

Even so, many subject matter experts (SMEs) are eager to learn about AI. They are ready to better understand how it can be used to augment and accelerate life sciences workflows.

At the same time, it can be challenging for non-technical experts to separate the AI signal from the noise. Workloads are already at all-time highs, so acquiring an in-depth understanding of AI in order to partner with IT on implementing solutions can feel particularly challenging, if not futile.

Gaining a core understanding of AI is a valuable step forward.

A foundational knowledge of AI empowers SMEs to feel more confident approaching the technology. It facilitates deeper conversations with IT. It builds trust. It provides a toolkit to raise flags as needed. It supports the integration of flexible, adaptive solutions that recognize no life sciences organization fits a one-size-fits-all approach.

>> Read the guide: 4 Tips for Implementing New Technology in Medical Affairs

Where should life sciences experts get started with AI?

In this article, we’ll go back to the beginning to bust some common misconceptions about AI. We’ll discuss 5 common myths about AI in the life sciences - and break down the reality.

Let’s take it all the way back to the start.

Myth 1: Artificial intelligence is a new field.

Reality: Roots of artificial intelligence can be traced back to antiquity and its philosophers. The earliest recording of cracking simple substitution ciphers dates back to the 9th century.

With centuries of advancement in between, by the 1950s, AI was a discipline. The term “artificial intelligence” was coined in 1956 by AI pioneer John McCarthy, during the notable Dartmouth Summer Research Project on Artificial Intelligence (DSRPAI).

An earlier step forward came in 1950 with the paper Computing Machinery and Intelligence, in which Alan Turing introduced the “imitation game,” which later became known as “the Turing test.” The Turing test is conducted between a human interrogator and two unknown entities - one human and one computer.

If, after a considerable amount of time, the interrogator cannot tell the difference between the machine and the human, the machine is deemed intelligent. The test is still used today as a benchmark.


Since the 1950s, the course of AI’s development has taken turns, experiencing both setbacks and progress. In recent years, some of the most dramatic progress has come from far greater computing power. Decades ago, there was too little memory and computing power to run algorithms on the massive data sets we can process now.

In 2018, another notable breakthrough came when the Google AI Language team published BERT, “Bidirectional Encoder Representations from Transformers.” BERT is a pre-trained language model that gauges the meaning of each word from the context of the sentence around it.

One in a long line of representations of language, BERT uses various techniques, such as the ability to look both forward and backward across a passage, to create an optimal representation of language. Pre-trained BERT models are open source and publicly available, meaning that anyone can take advantage of them and build upon them.

For many of us, artificial intelligence sounds closer to a futuristic science fiction movie than the here and now. The truth is, it’s built on years and years of research and advancement.

Myth 2: AI, ML, and NLP are the same.

Reality: The terms artificial intelligence, machine learning (ML), and natural language processing (NLP) are often used together. So, for many of us, they tend to blend together.

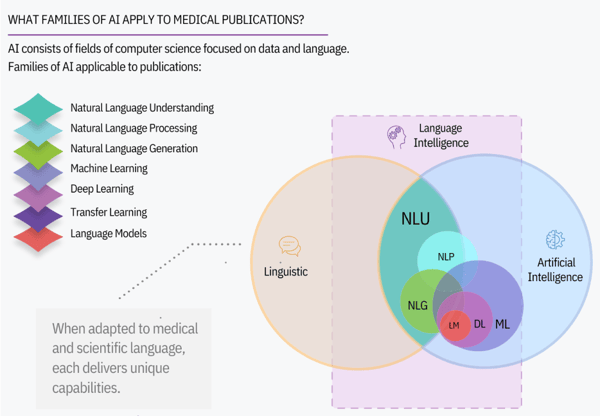

The truth is - artificial intelligence is an ensemble of agents. It uses a variety of linguistic and statistical models to look at a data set and draw inferences. AI consists of families, including NLP. Each family of AI provides a key layer in solving the knowledge extraction challenge for novel use cases.

When adapted to medical and scientific language, each delivers unique capabilities.

How can these families of AI be applied to medical language? Here are 3 examples:

- Natural Language Processing: Used to annotate medical text

- Machine Learning: Used to automate heterogeneous content ingestion

- Natural Language Understanding: Used to drive deeper concept understanding

Language Intelligence, what we use here at Sorcero, takes these families of AI and applies them to present solutions to the unique challenges of the life sciences.

Myth 3: In the Life Sciences, we can use public ontologies alone.

Reality: Public ontologies are a great resource for the life sciences. But there are some key reasons not to rely on them fully.

1. They are vast and untargeted.

2. They are incomplete, and in many cases, fail to represent specific needs or your internal IP.

For example: The terms for new drugs still in clinical trials have not yet reached public ontologies and, as such, cannot be tagged.

3. They reflect domains, not applications. While ontologies carve up the world into meaningful Concepts and Relationships, how they divide up the world can be very different.

For example: When we ask DOID and LOINC ‘what is cancer?’, we get two very different views.

4. They are neither infallible nor consistent.

For example: LOINC sees cancer as a demarcation of a type of patient; DOID sees it as a disease. Each is correct within a specific context. However, if, for example, your interest is in the types of neoplasm, neither addresses the correct level of granularity.

NCIT defines cancer as a synonym of the actual concept Neoplasms.
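To make the point concrete, here is a minimal sketch of the three views of “cancer” described above, held side by side. The table is a toy built only from the examples in this article; the dictionary layout and the function name `views_of` are illustrative assumptions, not how any of these ontologies is actually queried.

```python
from __future__ import annotations

# Toy lookup table built only from the examples in the text above.
# The structure is illustrative; it is not a real ontology API.
ONTOLOGY_VIEWS = {
    "DOID": {"cancer": "a disease"},
    "LOINC": {"cancer": "a demarcation of a type of patient"},
    "NCIT": {"cancer": "a synonym of the concept Neoplasms"},
}


def views_of(term: str) -> dict[str, str]:
    """Return how each ontology in the toy table characterizes `term`."""
    key = term.lower()
    return {
        name: entries[key]
        for name, entries in ONTOLOGY_VIEWS.items()
        if key in entries
    }


for source, view in views_of("Cancer").items():
    print(f"{source}: cancer is {view}")
```

A term that none of the sources knows, such as an internal research code, simply comes back empty, which is the gap a curated, application-specific layer is meant to fill.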

So, what’s the solution? One approach is to use an “Ontology Studio.”

An “Ontology Studio” can match the granularity of your knowledge base to your worldview to keep your product accurate, relevant and useful.

💡 How is AI transforming Medical and Regulatory Affairs in 2022? Download the free 10-page white paper

Myth 4: Large language models can fully meet the needs of the Life Sciences.

Reality: The life sciences move very fast linguistically. Research codes, new product names, new treatments, and new variants are coined at an incredible rate.

Large language models like GPT-3 and BERT (including BioBERT and SciBERT), along with their predecessors such as GloVe and Word2Vec, cannot keep pace with rapid linguistic evolution in the life sciences.

There will always be words they have never been exposed to and, as such, cannot meaningfully represent. When faced with unknown concepts, LLMs fail in unpredictable ways, which undermines trust.

How can we deal with “out of vocabulary” words in the life sciences?

A neurosymbolic approach, which combines rules-based approaches with deep-learning techniques, can improve the resilience of any off-the-shelf models, regardless of their size and complexity, explain Sorcero Senior Scientist Walid Saba and Lead NLU Engineer Adam Tomkins.

Let’s look at an example:

Statement: “The patient has been in contact with BA.4”

- The human approach to decoding is to try to understand the meaning of each term. We may ask, “What is BA.4?”

- In contrast, the language-model approach is to push on despite not knowing the term, leading to untrustworthy behavior.

There are many possible answers to “What is BA.4?”, but the answer that best satisfies our requirement is ontological.

By “What”, we mean “What kind of thing is …”, not “What is the structure of…” or any other valid, but unhelpful interpretation.
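As a rough illustration of the idea, the sketch below puts a small rules-based check in front of a stand-in for a model's vocabulary, so an unseen term like “BA.4” gets a “what kind of thing is this?” answer instead of being silently pushed through. Everything here is an assumption made for the example: the vocabulary set, the regular expression, the label text, and the function names are hypothetical, and no actual neural model is involved. It is not the approach described by the Sorcero team, only a toy version of the symbolic half.

```python
import re

# Hypothetical stand-in for the words a pre-trained model has been exposed to.
KNOWN_VOCABULARY = {"the", "patient", "has", "been", "in", "contact", "with"}

# Hypothetical symbolic rules: a surface pattern mapped to the kind of thing
# it likely denotes. The pattern and label are illustrative, not validated.
TYPE_RULES = [
    (re.compile(r"[A-Z]{1,3}\.\d+(\.\d+)*"), "variant-like designation"),
]


def kind_of(token):
    """Return 'known', a rule-derived type, or None when nothing applies."""
    if token.lower() in KNOWN_VOCABULARY:
        return "known"
    for pattern, kind in TYPE_RULES:
        if pattern.fullmatch(token):
            return kind
    return None  # unresolved: a candidate for expert review


def annotate(statement):
    """Split a statement on whitespace and label each token."""
    tokens = [t.strip(".,;:!?\"'") for t in statement.split()]
    return [(t, kind_of(t)) for t in tokens if t]


for token, kind in annotate("The patient has been in contact with BA.4"):
    print(f"{token!r}: {kind}")
```

Tokens that match neither the vocabulary nor any rule come back as `None`, which gives a downstream step an explicit signal to ask for help rather than guess.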

Situations like these can arise in many workflows, including medical insight management.

🧬 How are life sciences organizations using AI to generate 300% more insights? Explore the Medical Insights Management (MIM) platform.

Myth 5: AI will replace the jobs of life science professionals.

Reality: Artificial intelligence is often seen as an “automation” solution. While some everyday tasks can be automated, in a highly critical field like the life sciences or healthcare, automation presents many risks.

On the other hand, augmentation focuses on delivering solutions that can support, not replace, human experts.

With a “human in the loop” approach, experts verify and adjudicate the decisions that AI proposes before they reach the end stakeholder. In the life sciences, this is essential.
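One way to picture this is a simple gate: an AI suggestion carries no final label until a named expert accepts or corrects it, and only adjudicated items are released. The sketch below is a minimal illustration under that assumption; the class, field, and function names (`Suggestion`, `adjudicate`, `releasable`) and the sample labels are hypothetical and do not describe any particular product.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Suggestion:
    item_id: str
    ai_label: str
    final_label: Optional[str] = None  # set only by a human reviewer
    reviewer: Optional[str] = None


def adjudicate(suggestion, reviewer, accept, corrected_label=None):
    """Record an expert's decision: accept the AI label or override it."""
    if not accept and corrected_label is None:
        raise ValueError("A rejected suggestion needs a corrected label.")
    suggestion.final_label = suggestion.ai_label if accept else corrected_label
    suggestion.reviewer = reviewer
    return suggestion


def releasable(suggestions: List[Suggestion]) -> List[Suggestion]:
    """Only items a human has adjudicated may reach the end stakeholder."""
    return [s for s in suggestions if s.reviewer is not None]


queue = [
    Suggestion("doc-1", "relevant"),
    Suggestion("doc-2", "not relevant"),
    Suggestion("doc-3", "relevant"),
]
adjudicate(queue[0], reviewer="expert-a", accept=True)
adjudicate(queue[1], reviewer="expert-a", accept=False, corrected_label="relevant")

for s in releasable(queue):
    print(s.item_id, s.final_label, s.reviewer)
```

The third item stays in the queue until someone reviews it, which is the whole point: the AI proposes, the expert decides.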

The goal of any organization should be to enable SMEs to make the most of their data, knowledge base, and expertise - not only to support workers, but also to improve patient outcomes.

So, what's next? How can organizations begin to map out use cases for practical applications of AI? Let's explore some real-world scenarios.

4 Use Cases for AI in the Life Sciences

1. Literature Monitoring

- Mining publications for personalized insights, using advanced search criteria derived from patient characteristics via Natural Language Understanding

See the Real-World Case Study: The Language Intelligence approach and continuous learning delivered double-digit absolute performance improvements over other AI solutions and above human performance alone (89.3%) across all study types. This exceeded the 95% NPV threshold required for a regulatory-grade literature review solution. ➡️ See the results of the ILM case study here.

2. Pharmacovigilance:

- First line of safety case processing

- FDA, MHRA already underway with this

3. Regulatory:

- Pulling analytics from unstructured data (such as submissions, health authority correspondence, etc.)

IVDR Case Study: Executing high-specificity full literature search and data extraction to power literature-only 505(b)(2) New Drug Applications (NDA) via Natural Language Inference

>> How one of the world's largest and most innovative diagnostics companies uses Sorcero Language Intelligence to stay ahead of new EU IVD regulations: Read the IVDR Case Study

4. Medical Affairs:

- Mining publications for personalized treatment insights, using advanced search criteria derived from patient characteristics via Natural Language Understanding

>> Mapping AI to Medical Affairs Functions: Download the Free Fact Sheet
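The literature monitoring case study above cites a 95% NPV threshold. For readers less familiar with the metric, negative predictive value (NPV) is the share of items a system screened out that were truly irrelevant: true negatives divided by the sum of true negatives and false negatives. The short sketch below shows the arithmetic. The counts are made-up illustration values, not figures from the case study, and the function and constant names are our own.

```python
NPV_THRESHOLD = 0.95  # the threshold named in the case study text


def negative_predictive_value(true_negatives, false_negatives):
    """NPV = TN / (TN + FN): how often an 'exclude' decision was correct."""
    total = true_negatives + false_negatives
    if total == 0:
        raise ValueError("No negative predictions to evaluate.")
    return true_negatives / total


# Hypothetical counts for illustration only.
npv = negative_predictive_value(true_negatives=970, false_negatives=30)
meets_threshold = npv >= NPV_THRESHOLD

print(f"NPV = {npv:.1%}, meets threshold: {meets_threshold}")
```

In a literature review setting, a high NPV matters because every false negative is a relevant publication that was wrongly set aside.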

Mapping Artificial Intelligence to Your Life Sciences Workflows

Curious to learn more about how artificial intelligence can support your workflows - and better patient outcomes? Take a virtual tour of our Clarity platform, or schedule an introductory call with our team members. We'll be happy to discuss any questions or ideas you have related to AI and Omnichannel Analytics for the Life Sciences.
